Classifying Sexual Abuse in Chats through the Bag of Words NLP Model

April 26, 2020

The problem

Child sexual abuse is a particular problem in academia. In the United States, “an estimated 10% of K–12 students will experience sexual misconduct by a school employee by the time they graduate from high school.” Shocking stories of sexual abuse at Penn State, University of Michigan, Ohio State and other universities are continuously revealed. In some of these cases, it’s taken decades of dedicated work by victims advocates and journalists for the abuse to come light.

The solution

The project team built a Natural Language Processing (NLP) model that will be of great help to classify predator individuals in online chats to prevent sexual abuse.

The dataset

The dataset that is used in this report is Pan12. Pan12 contains chat texts between the molesters and children.

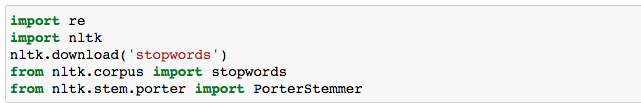

Importing the NLP packages: Preprocessing

The first major library that needs to be imported is “re” or Regular Expression. The second major library is the Natural Language Tool Kit (NLTK), which is another Python library that will help us to program with natural language. It comes with more than 50 other repositories such as WordNet, providing libraries that can perform tokenizing, stemming, classification, parsing, tagging, and semantic analysis.

The last one is PorterStemmer from the NLTK library, which provides us with stemming. Stemming is the process of linguistic normalization. It reduces words to their word roots for example organization, organizes, organized, organizer, and organize, all would become organized.

Importing NLP packages

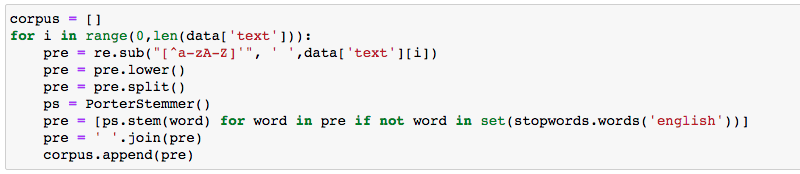

Now, we can apply all those changes.

NLP preprocessing

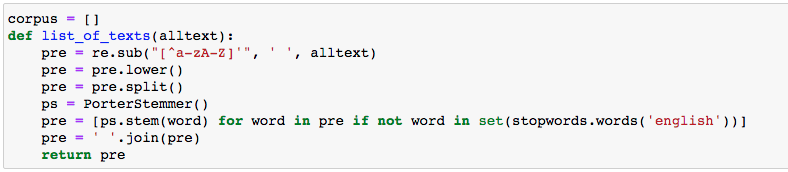

Also, a better approach, especially for large datasets, is to define a function like this and then calling a lambda function on it. Just be careful in our previous approach the output was an array. But by applying lambda function on the following, the output would be a column in the dataset.

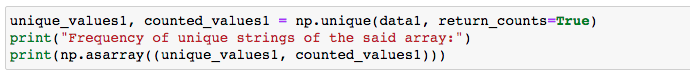

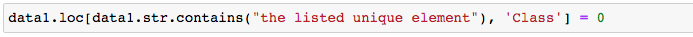

Labeling the important strings

This method works for datasets with a limited number of unique features. In the case of having a large number of features in a dataset, this method isn’t efficient. Future versions of this article will address this issue.

Finding most-used strings in the subsets

Labeling the important strings

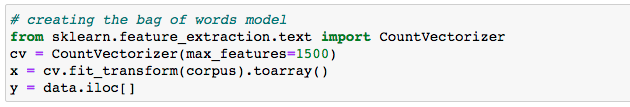

Then we extracted the features of our dataset. Features are specific words that are being used between the child and the potential molester. The model predicts whether communicated words are sexual or not.

The bag of words model is one of the good choices for us. In this model, a text (a sentence) is considered as a bag (multiset) of its words. It only considers multiplicity without considering the grammar or the order of the words.

To do so CountVectorizer from Sickit-Learn is our module choice, it provides us with a conversion of text documents to a matrix of token accounts. So, we would have a dictionary of words in an array, and if in each set of strings or bag of words any of these predefined texts or words are included, the corresponding number to that specific word in the matrix format of the dictionary would be 1, and 0 if not included.

Then we would fit and transform the function on our features part of our dataset.

Creating the NLP model

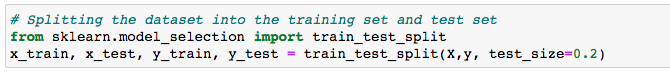

Then we would separate the dataset into train_features, test_features, train_labels, and test_labels by randomly considering 80% of values to train sets and 20% of values to test sets. X parameter represents those columns of the dataset having the features and y parameter represents their corresponding labels.

Making training and test sets

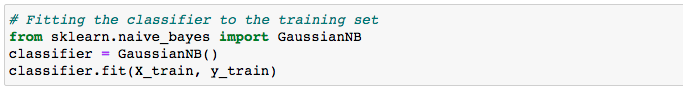

Introducing the Gaussian module and fitting it to our training features and labels.

Classifying training set by Gaussian method

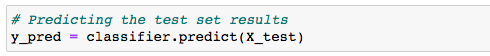

Following with the prediction of our labels based on our test_features.

Making predictions on the test set

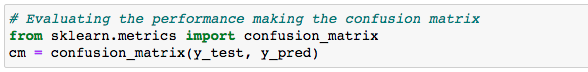

In the end, we would provide a confusion_matrix to check our predicted labels with our test_labels.

Making the confusion matrix to qualify our model

Conclusion and summary

In this report, we provided an NLP model to classify potential child molesters to prevent sexual abuse early on. We used BeautifulSoap to import the dataset in XML format, derived the raw text, and cleaned the dataset. Next, we introduced various NLP libraries and packages and applied them to our dataset, used the bag of words model, and output the confusion matrix.

You might also like

Want to work with us too?